Humans have evolved to make fast decisions. For example, when a stranger approaches you, you know almost instantaneously whether they represent a threat and whether they are angry, friendly or happy. The cognitive processing involved would be mind-boggling if we actually stopped to think about it (which we don’t).

We subconsciously process a myriad of information, such as the person’s stance and their facial expression, and make a decision in a split second about whether this stranger represents a threat or opportunity. Or, in the language of evolution, we decide “Which one of us is lunch?” and “What am I going to do about it?”

The key point here is that we process this information subconsciously: it is an instinctive process rather than a conscious evaluation. The cognitive psychologists Daniel Kahneman and Amos Tversky would call this System 1 decision-making, which is characterized as fast, automatic, frequent, emotional, stereotypic and subconscious, as described in the seminal bestseller Thinking, Fast and Slow (Kahneman, 2011).

However, these types of decisions are subject to cognitive biases, such as over-optimism, loss aversion, sensitivity to the way a question is worded (“framing”), the substitution of a simpler question for the one actually asked, and reliance on more readily available answers to different yet similar questions.

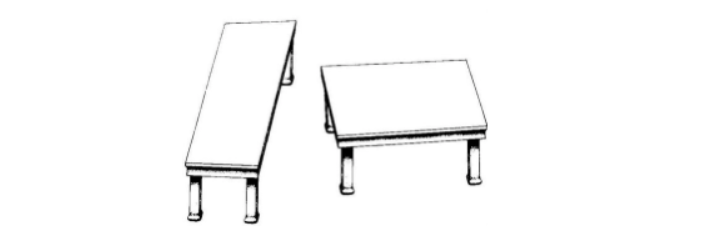

For example, which one of the tables in the image above is longer?

The one on the left, obviously.

That was your System 1 jumping in and deciding for you. Now let your System 2 brain – the one that deals with slow, effortful, infrequent, logical, conscious calculations – go and fetch a ruler and measure the tables. They are the same length.

This is a simple optical cognitive bias. We humans have many other cognitive biases around decision-making, which make us prone to error, sometimes in life-threatening situations such as flying planes and diagnosing cancer. Most frequently, though, these errors occur when we make business decisions based on the evidence (data) at hand.

The discipline that studies the effects of psychological factors on (economic) decisions is called behavioral economics. Behavioral economics broke into mainstream thinking several years ago, and it’s becoming a hot topic in a variety of industries. With the 2017 Nobel Prize in Economic Sciences awarded to behavioral economist Richard Thaler, the behavioral sciences saw another spike in interest.

We at BiZZdesign believe the lessons learned in behavioral economics can benefit the world of Enterprise Architecture as well.

When making strategic decisions at the enterprise level, it is incredibly important to be aware of these biases in order to avoid suboptimal decisions, which can be very costly. Making fact-based decisions and understanding the main decision criteria are key to successful enterprise transformation.

This blog series aims to help organizational designers leverage human biases to steer decision-makers into making even better decisions. In the next two blog posts in this series, we will use examples from famous behavioral economists and apply them to the world of Enterprise Architecture. Finally, we will discuss whether it is possible, and necessary, to minimize or even rule out human biases.

Stay tuned!