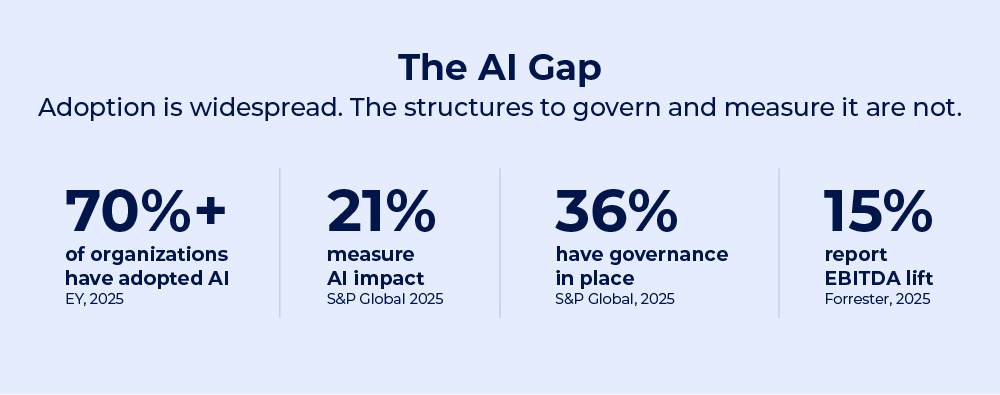

Enterprises are investing billions in artificial intelligence, yet most still struggle to show what they have gained from it. Recent Forrester research reveals that only 15% of AI decision-makers reported a positive impact on profitability in the past 12 months, and fewer than one-third can link AI outputs to concrete business benefits. The gap between expectations and reality has grown so wide that Forrester predicts a market correction, with enterprises deferring 25% of planned 2026 AI spend into 2027.

The signal is hard to ignore: the value hasn’t landed yet.

For technology leaders, this creates a strategic dilemma. Pause – or slow down – AI investment, and you risk falling behind competitors who manage to operationalize AI. Or keep spending in the hope of establishing a clear line of sight to ROI, and you risk budgets, credibility, and shareholder confidence. The pressure is on, and neither option is comfortable.

Analysts largely agree that organizations should ignore the hype, concentrate on tangible outcomes, and reinforce the foundations that ensure AI delivers value: visibility into how AI connects to existing systems and processes, governance to evaluate what's working, and alignment between AI investments and strategic priorities. But what does that mean in practice?

The Root Causes of AI Project Failure

The last two years were about running experiments and testing hypotheses. Now organizations face a fundamentally different challenge: deciding which experiments to scale and building the governance to scale them safely.

Most organizations haven't made that shift yet. Experimentation is inherently risky, and many proofs of concept (POCs) will fail. That's expected. The real issue is whether organizations have the right approach to fail fast at limited cost, the governance to evaluate objectively what's working, and the discipline to make evidence-based decisions about what to scale.

The answer is to test the critical hypotheses, capturing evidence for desirability (“does this create value for users or customers?”), feasibility (“can we actually deliver this in a real-world situation?”), and viability (“can we run this profitably in production?”). Each test generates learnings that inform the decision either to keep investing resources to learn more, or to kill the experiment and limit exposure to ideas with a low probability of success.
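To make the continue-or-kill logic concrete, here is a minimal Python sketch of a stage gate over the three hypotheses. It assumes each hypothesis is recorded as untested (`None`), supported (`True`), or refuted (`False`). The `HypothesisEvidence` class, the `gate_decision` function, and the rule that a single refuted hypothesis ends the experiment are illustrative assumptions, not a prescribed method.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    KILL = "kill the experiment"
    CONTINUE = "keep investing to learn more"
    CANDIDATE_FOR_SCALING = "evidence supports a scaling decision"


@dataclass
class HypothesisEvidence:
    # Hypothetical record: None = not yet tested, True = supported, False = refuted.
    desirability: Optional[bool] = None  # does this create value for users or customers?
    feasibility: Optional[bool] = None   # can we deliver this in a real-world situation?
    viability: Optional[bool] = None     # can we run this profitably in production?


def gate_decision(evidence: HypothesisEvidence) -> Decision:
    """Assumed rule: any refuted hypothesis kills the experiment early;
    all three supported makes it a candidate for scaling; otherwise keep testing."""
    results = [evidence.desirability, evidence.feasibility, evidence.viability]
    if any(r is False for r in results):
        return Decision.KILL
    if all(r is True for r in results):
        return Decision.CANDIDATE_FOR_SCALING
    return Decision.CONTINUE


if __name__ == "__main__":
    print(gate_decision(HypothesisEvidence(desirability=True)))  # Decision.CONTINUE
    print(gate_decision(HypothesisEvidence(True, False)))        # Decision.KILL
```

Stopping at the first refuted hypothesis is one way to fail fast at limited cost; a real gate might weigh the strength of the evidence or require sign-off from whoever holds the decision rights.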

The breakdown often happens at the organizational level: in how AI projects are selected, whether there's governance to evaluate what's working, how initiatives relate to existing enterprise capabilities and assets (e.g., data), and whether outcomes are measured objectively in terms of business benefits and P&L impact, with a clear line of sight to strategic goals.

Scaling is expensive and high-commitment, so the stakes are much higher than in experimentation; scaling the wrong initiative wastes far more resources than killing ten experiments early.

Yet most organizations lack the foundation to make those decisions well. EY’s 2025 research shows that while more than 70% of organizations say they have scaled or integrated AI, only about a third report having the governance protocols needed to guide or evaluate the work. S&P Global’s 2025 analysis points in the same direction, with just over one-third of companies reporting an AI policy and only 21% saying they measure the impact of their AI initiatives.

In this environment, AI investments often proliferate in silos. Marketing launches a chatbot. Finance experiments with forecasting models. IT pilots automation. Each initiative may show promise in isolation, but they don't necessarily add up to strategic value.

The result is predictable. Fragmentation grows, duplication increases, and technical debt accumulates. As siloed projects proliferate, keeping track of data dependencies, data quality, functional duplication, and compliance status becomes far harder. Recent Gartner analysis found that nearly two-thirds of organizations lack the data management practices needed for AI, making such issues even more difficult to trace. When something goes wrong, teams struggle to identify the cause, understand the upstream dependencies and downstream impacts, or determine whether an issue stems from data, integration, compliance, or design.

Ultimately, the failure points sit in how transformation is organized and executed across the business, and in particular the transition from exploration (experimentation) to exploitation (scaling what works).

The Missing Layer: Visibility and Governance

Three foundational gaps consistently undermine AI outcomes. At their core, they stem from the same issue: organizations lack the coherent semantic model of their enterprise that AI needs to produce meaningful results. Without a clear representation of how capabilities, processes, systems, and data interact, AI produces generic outputs disconnected from business reality.

1. Limited visibility of the IT and business landscape

AI does not operate in isolation. It draws on data sources, processes, applications, infrastructure, and regulatory requirements. Leaders routinely underestimate these connections. What appears to be a straightforward use case — automating invoice processing, for example — might touch six legacy systems, three data sources, and two compliance frameworks. When teams can't see these dependencies, they can't assess risk, estimate effort, or predict impact. Without visibility, even simple projects run into delays, overspend, and rework.
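Dependency visibility can be thought of as a graph problem: a use case depends on applications, which in turn depend on data and regulations, and so on. The Python sketch below walks such a graph breadth-first to list everything an initiative transitively touches. The landscape, element names, and kinds are entirely hypothetical; in practice this information would come from an architecture repository rather than a hand-written dictionary.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical landscape: each element maps to the elements it depends on.
DEPENDS_ON: Dict[str, List[str]] = {
    "invoice-automation": ["erp", "ocr-service", "gdpr"],
    "erp": ["vendor-master-data", "sox"],
    "ocr-service": ["document-archive"],
}

# Hypothetical classification of each element.
KIND: Dict[str, str] = {
    "erp": "application",
    "ocr-service": "application",
    "vendor-master-data": "data",
    "document-archive": "data",
    "gdpr": "regulation",
    "sox": "regulation",
}


def transitive_dependencies(graph: Dict[str, List[str]], start: str) -> Set[str]:
    """Breadth-first walk returning every element reachable from `start`."""
    seen: Set[str] = set()
    queue = deque(graph.get(start, []))
    while queue:
        element = queue.popleft()
        if element in seen:
            continue  # already visited; this also protects against cycles
        seen.add(element)
        queue.extend(graph.get(element, []))
    return seen


def group_by_kind(elements: Set[str], kinds: Dict[str, str]) -> Dict[str, List[str]]:
    """Group elements by kind (sorted for stable output); unclassified ones go under 'unknown'."""
    grouped: Dict[str, List[str]] = {}
    for element in sorted(elements):
        grouped.setdefault(kinds.get(element, "unknown"), []).append(element)
    return grouped


if __name__ == "__main__":
    deps = transitive_dependencies(DEPENDS_ON, "invoice-automation")
    print(group_by_kind(deps, KIND))
```

With this made-up data, the seemingly simple `invoice-automation` use case turns out to touch two applications, two data sources, and two regulations, which is exactly the kind of picture teams need before estimating effort or risk.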

2. Weak governance structure

Effective governance is not a barrier to innovation; it is a prerequisite for scaling it. It's about decision rights, accountability, and measurement. Who approves new AI investments? How are trade-offs between innovation and risk managed? What metrics define success? Governance requirements differ depending on the phase: experiments need speed and the discipline to kill what isn't working; production initiatives require security, compliance review, and accountability for ROI.

Organizations that lack clear decision rights, evaluation criteria, and accountability mechanisms, or that apply the wrong governance model to the wrong phase, tend to accumulate AI experiments without a path to operationalization. The result is AI sprawl: dozens of uncoordinated experiments that never mature into enterprise capabilities.

3. Misalignment between strategy and execution

Even when AI investments are individually sound, they deliver limited value when they are not connected to broader strategic objectives. A retailer might deploy AI for demand forecasting while simultaneously running a separate initiative to optimize supply chain logistics, missing the opportunity to integrate both efforts into a single, more powerful capability. These missed synergies add up over time.

Each of these gaps is a consequence of the same underlying issue. AI is not failing because it cannot generate value. It is failing because organizations lack sufficient visibility into the broader operating context required to deploy it for maximum impact. Without visibility, governance, and alignment, even well-funded AI initiatives may deliver only a fraction of their potential value. Transformation doesn't flow; it stalls in silos.

4 Steps to Improve AI ROI and Governance

Making this shift requires discipline, not disruption. Four concrete steps provide the foundation.

Start with visibility.

Evaluate the current landscape before introducing new AI initiatives. What data sources exist? How good is the data quality? Which applications and processes would be affected? Where are the dependencies, risks, and opportunities? Organizations that invest in understanding their IT landscape, through architecture repositories that connect business activities with data, technology, and change initiatives, can make better decisions about where AI can add the most value. Application Portfolio Management reinforces this by providing a clear view of applications, ownership, and dependencies, which helps teams understand how AI initiatives will interact with the current landscape and where obstacles may arise.

Establish governance that scales.

AI governance doesn't need to be bureaucratic, but it does need to be explicit. Define who evaluates AI investments, how they are evaluated (balancing criteria such as ROI, speed, risk, and strategic fit), and how success will be measured. There is no one-size-fits-all model. The right governance model will almost certainly depend on the type of company, its legal and compliance jurisdiction, the business function in which AI is being used (HR will look very different from product innovation), and the competitive business context. Integrate decisions about AI investments into existing investment and risk management frameworks rather than treating them as a separate domain.

Align AI to strategy.

Every AI initiative should answer a simple question: Which business outcome does this advance, and how will we know if it's working? Tie AI use cases to benefits for specific capabilities: faster claims processing, reduced churn, optimized inventory. Strategic Portfolio Management strengthens this alignment by linking AI investments to strategic priorities, capabilities, resources, and expected benefits, so organizations can focus their resources on work that advances strategy rather than spreading them across areas that don’t move the needle.

Treat AI as part of transformation.

AI projects should follow the same discipline as any other major change initiative: integrated into how the organization plans, designs, and governs change, not bolted onto the side of the business. Solution Architecture Management ensures AI initiatives align with architecture principles, standards, and the broader transformation roadmap so they can scale coherently rather than creating fragmentation. When AI investments flow through the same transformation discipline as application rationalization, process optimization, or compliance initiatives, they're more likely to stick and more likely to deliver compounding value.

The Future of AI Transformation: Disciplined Adoption and Measurable Outcomes

Forrester’s predicted market correction signals a shift in how organizations approach AI investment. The next phase will reward organizations that combine ambition with smarter approaches to governance and execution. The ones that succeed will not necessarily spend more; they will have better enterprise-wide visibility, govern more effectively, and align more tightly to strategy.

The good news? The organizations that get this right don't just improve their AI ROI. They build a transformation muscle that pays dividends across all types of transformation, from cloud migration to regulatory compliance to customer experience.

In 2026, the organizations that pull ahead will be the ones that put strong transformation governance around AI, enabling an operating model capable of scaling it.

Let's discuss your AI roadmap.

If you're navigating the tension between AI ambition and ROI accountability, we'd welcome the conversation. Bizzdesign’s Enterprise Transformation Suite helps organizations build the visibility, governance, and alignment needed to make AI investments measurable and sustainable.

FAQs

Many AI initiatives fail not because the technology underperforms, but because organizations lack the visibility, governance, and alignment needed to scale successfully. The breakdown happens at the organizational level: in how AI projects are selected, whether there's governance to evaluate what's working, how initiatives relate to existing enterprise capabilities and assets, and whether outcomes are measured objectively in terms of business benefits and P&L impact, with line of sight to strategic goals. Without visibility, governance, and alignment, teams can’t assess dependencies, manage risk, measure outcomes, or make evidence-based decisions about which initiatives to scale.

The challenge is deciding which AI initiatives to scale and building the governance to scale them safely. Organizations need the right approach to fail fast, the governance to evaluate objectively what's working, and the discipline to make evidence-based decisions about what to scale. Without these foundations, AI investments multiply in silos, fragmentation increases, and promising use cases stall. Moving from isolated pilots to enterprise-wide impact requires a shared architectural view, clear governance structures, and the ability to plan, design, and govern AI as part of broader transformation.

Delivering AI value at scale requires three foundational elements:

- Visibility into the enterprise landscape — systems, processes, data flows, risks, dependencies.

- Governance that defines clear decision rights, evaluation criteria, and accountability for outcomes.

- Alignment between AI investments and strategic business priorities.

These elements create the conditions for AI to grow from isolated pilots into an enterprise-wide capability, rather than a collection of disconnected efforts that fail to deliver value. Bizzdesign’s Enterprise Transformation Suite gives organizations the visibility, governance, and alignment needed to move AI from isolated pilots to sustainable, enterprise-wide impact.

The strongest AI use cases to scale are the ones where teams have a clear line of sight into how the initiative connects to the rest of the business. Companies should prioritize use cases that:

- Align directly with strategic objectives, rather than emerging from isolated experimentation

- Have clear visibility into the systems, processes, and data they rely on, so dependencies and risks are understood upfront

- Fit within existing governance structures, allowing teams to evaluate impact, effort, and accountability

- Include defined success criteria, making it possible to measure outcomes once deployed

Selecting use cases without understanding dependencies or strategic relevance leads to fragmented efforts, overlapping pilots, rising technical debt, and limited ROI — which is why many promising initiatives never reach operational scale.
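The four prioritization criteria above can be applied as a simple screening checklist. The Python sketch below is one hedged way to do that: the `UseCase` fields, the `screening_gaps` function, and the rule that every criterion must pass are assumptions made for illustration, and the example use cases, owner, and metric are hypothetical, borrowing outcomes named earlier in this article rather than real data.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class UseCase:
    # Hypothetical fields mirroring the four criteria above.
    name: str
    strategic_objective: Optional[str] = None  # which business outcome does it advance?
    dependencies_mapped: bool = False          # systems, processes, and data understood upfront?
    accountable_owner: Optional[str] = None    # who is accountable within existing governance?
    success_metrics: List[str] = field(default_factory=list)  # how will we know it works?


def screening_gaps(uc: UseCase) -> List[str]:
    """Return the criteria a use case does not yet meet (an empty list means it passes)."""
    gaps = []
    if not uc.strategic_objective:
        gaps.append("not linked to a strategic objective")
    if not uc.dependencies_mapped:
        gaps.append("dependencies and risks not mapped")
    if not uc.accountable_owner:
        gaps.append("no accountable owner in governance")
    if not uc.success_metrics:
        gaps.append("no defined success criteria")
    return gaps


if __name__ == "__main__":
    candidates = [
        UseCase("claims triage", "faster claims processing", True,
                "claims operations lead", ["median handling time"]),
        UseCase("churn model", "reduced churn", True, None, []),
    ]
    for uc in candidates:
        gaps = screening_gaps(uc)
        print(uc.name, "->", "ready to scale" if not gaps else "; ".join(gaps))
```

Returning the list of gaps, rather than a single pass/fail flag, keeps the screening evidence-based: a stalled use case comes with a concrete to-do list instead of a rejection.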

Enterprise architecture gives organizations the visibility they need to understand how AI connects to existing systems, processes, data flows, and risks. A shared architectural view helps teams see dependencies upfront, avoid overlaps, and prevent the fragmentation that causes pilots to stall. Enterprise architecture also helps ensure AI initiatives align with strategic priorities and can be governed consistently across the business, turning isolated experiments into scalable enterprise capabilities. By providing the structural context for decision-making, EA enables AI investments to deliver measurable value and supports the shift from experimentation to scaling what works.